David Casacuberta, Saray Ayala and Jordi Vallverdú

Embodying cognition: A Morphological Perspective

Abstract

After several decades of success in different areas and numerous effective applications, algorithmic Artificial Intelligence has revealed its limitations. If in our quest for artificial intelligence we want to understand natural forms of intelligence, we need to shift from platform-free algorithms to embodied and embedded agents. Under the embodied perspective, intelligence is not so much a matter of algorithms as of the continuous interactions of an embodied agent with the real world. In this paper we adhere to a specific reading of the embodied view usually known as enactivism, to argue that 1) it is a more reasonable model of how the mind really works; 2) it has both theoretical and empirical benefits for Artificial Intelligence; and 3) it can be easily implemented in simple robotic sets like Lego Mindstorms (TM). In particular, the authors will explore the computational role that morphology can play in artificial systems. They will illustrate their ideas by presenting several Lego Mindstorms robots where morphology is critical for the robot’s behaviour.

Keywords: multiple realizability, morphological computation, enactivism, cognitivism, XOR.

1. From symbols to bodies

Artificial Intelligence (AI) can be approached with a purely engineering frame of mind, looking for algorithms that work and are able to solve a problem. However, one can adopt a philosophical frame of mind too, and consider AI a conceptual tool for gaining better insight into what the mind is and how it works. Within this frame of mind, just solving problems is not enough: we want our theory to have, to a certain degree, psychological reality. We want our model to embed some of the earthly properties that human minds have. Currently, discussion centres on three main models of what the mind is: symbolic cognitivism, connectionism and the embodied mind. In this paper we adhere to the third model; in particular, to a special branch usually known as enactivism, to argue that 1) it is a more reasonable model of how the mind really works; 2) it has both theoretical and empirical benefits for AI; and 3) it can be easily implemented in simple robotic sets like Lego Mindstorms (TM).

Much has already been written about the differences between these three models of mind, and about which is the superior one. To our understanding, despite their success in creating models on subjects like mathematical reasoning, face recognition, visual perception or even the creation of artworks, both the cognitivist and the connectionist approaches have one major flaw which is of considerable philosophical importance: they cannot produce a credible account of the relationship between mind and world. Whether they rely on local symbolic representations or on distributed subsymbolic representations, both models are based on an abstract reconstruction of a specific domain of the physical world, and both the selection of representations and the way they are connected to real-life events and objects have been articulated beforehand by the cognitive system (Thompson 2007). Connectionism tries to generate a more plausible description of the mind, trying to better capture its neurological basis. This leads to a more dynamic account of representations: instead of being something stable, they are distributed along the whole system as well as self-organised, having certain co-variation with the environment. However, both symbolic cognitivism and connectionism consider the world and the mind as two completely different entities, with a very much regulated protocol of interaction.

The embodied mind shares some characteristics with connectionism. It also proposes a self-organised system and it is based on a dynamic approach. However, in this approach dynamicism has been extended to the correspondence between mind and world. Instead of having a simple coordinated correspondence between symbols (or subsymbols) and real-life objects, the embodied mind paradigm is based on a system of non-linear causality in which, by means of sensorimotor integrations, brain, body and environment are continuously influencing one another, making it impossible to separate the three into clear-cut parts. In order to have such a system, it is essential that the cognitive entity has some sort of body that can obtain continuous information from the real world in order to co-vary and co-adapt with it (Thompson 2007). This is why the paradigm we are discussing is usually called the embodied mind. First of all, we need to avoid the tendency to interpret the notion of embodiment in its weakest sense: that is, that a mind needs a body. The embodied mind paradigm argues for something a lot stronger than that, namely, that mind is just the result of circular and continuous processes of causality between brain activity, body and environment, with no possibility of making a clear distinction among them, nor a chance to build a theoretical model in which mind can be described autonomously from body and environment (Pfeifer and Iida, 2005).

The particular reading of the embodied mind paradigm we adhere to here, known as enactivism, is based on the following ideas (Varela, Thompson, Lutz, Rosch 1991):

1) Living beings are autonomous entities and are responsible for their own goals, which are not just set from the outside.

2) The nervous system is also an autonomous entity, which takes care of, and is responsible for, maintaining its own coherent and meaningful patterns.

3) Cognition is the skillful know-how that co-varies with the environment and how it evolves. Every cognitive action is both situated and embodied.

4) Cognitive processes are not formally prespecified, but are relational domains continually coupled with the environment.

A large part of the literature takes living beings as the main metaphor. In their seminal book, Varela et al. (1991) developed most characteristics of their model by analysing the way cells behave and represent their environment. Nevertheless, this shouldn’t be considered a vitalist model, one holding that only living beings can achieve real consciousness. Continuous coupling with the environment and self-established goals are the only requirements, as is shown in the aforementioned book when Varela et al. argue for the relevance of Brooks’ robots, presenting them as artificial systems that have some of the main characteristics of an embodied mind (Brooks 1991).

In this work we will defend the enactive approach by exploring the critical role morphology plays in artificial systems. The structure of this work is as follows. First we will point out the benefits of the enactive approach. We will then explore the (in)compatibility between the embodied view and the multiple realizability of mental processes, digging into the debate between two different readings of the embodied perspective, a functionalist and a reductionist one. We will illustrate our explanation with a thought experiment (a robot computing the XOR function courtesy of its morphology), concluding that the functionalist stance does not really match the enactive view. This thought experiment serves as the inspiration for our own proposal: three robots that compute XOR courtesy of their morphology. Before introducing the robots, we will review our preceding research, which constituted our first approximation to the possibility of morphology playing a role in computation.

2. The quest for enactive AI

Despite the fact that philosophers like Dreyfus (Dreyfus 1972; 1992) are convinced of an unbridgeable gap between living and artificial beings that makes an activity like AI impossible, one can reverse the line of thought and attempt to discover if and how these key characteristics of living beings can be reproduced in artificial systems, either by means of simulations or robotics. This is what the enactive paradigm tries to understand. Following Froese and Ziemke (2009) we can state two main systemic requirements in order to be able to speak of enactive AI: autonomy and adaptivity. Despite mysterious claims (Flanagan 2009), these two properties are not beyond scientific and philosophical analysis, are not restricted to the realm of living beings, and can be satisfied in an artificial system.

Instead of trying to rapidly dismiss Heideggerian-based criticisms of current AI such as those of the already mentioned Dreyfus, or more biologically based ones like those of Di Paolo and Iizuka (2008) or Moreno and Etxeberria (2005), we believe it is better to take this challenge seriously. Once all arguments and counterarguments are weighed, it is clear that current approaches to AI which don’t include some sort of enactive/embodied perspective face several challenges which need to be addressed.

One of these problems is what Dreyfus calls the Big Remaining problem, a problem closely related to what Dennett called the Frame problem (Dennett 1984). This problem refers to the (im)possibility of a formal model of a cognitive system to “directly pick up significance and improve our sensitivity to relevance” (Dreyfus, 2007). If artificial systems cannot get meaning from the outside, but rather are following a set of formal rules, we are missing the main characteristic that makes an agent a real one, and such systems are also not really useful in real-life contexts, as the frame problem shows. The problem of establishing meaning in artificial systems is not solved simply by adding sensors that connect to the environment. As we mentioned in the previous section, the embodied mind paradigm implies more than just having a body. Following Di Paolo & Iizuka (2008) as well as Froese & Ziemke (2009), getting motor systems and sensors into a loop is a necessary condition for autonomy and adaptivity, but it is far from sufficient. As long as this feedback between environment and cognitive system is imposed from the outside, we won’t have a real enactive system, which needs to set its goals from the inside.

The need for intrinsically posed goals is not raised only by the enactive perspective. It was stated as early as Kant (1790) and can be found in authors that defend a biological, Darwinian approach to functionalism like Millikan (1991) or Flanagan (2009). Either from an a priori analysis of the concept of autonomy or trying to naturalise it, the consequence is largely the same: in order for a system that adapts to the environment to be autonomous, it has to be the system itself that sets the goals, not an outside element which postulates those criteria from the beginning. As the biosemantical model defended in Millikan (1991) states, this doesn’t imply any type of vitalism or mysterious positions. The fact that the aims and plans of cognitive living systems are intrinsic can be explained by the process of natural selection.

Following the ideas stated in Froese & Ziemke (2009), we will present the basic methodological principles behind enactive AI. They are:

1) Methodology is viewed under scientific light and not as an engineering process. We want to understand mind, not only solve practical problems on face recognition or make guesses about the behaviour of the stock market.

2) Behaviour cannot be prefigured by formal guesses of how the world is and then be implemented in the system. Behaviour emerges from the continuous interactions between the system and its environment.

3) An optimal enactive AI system needs to find a balance between robustness criteria and flexibility, as one can see in the natural world.

What is the main difference between enactivism and plain embodiment? Despite the fact that Thompson (2007) uses both terms almost synonymously, we believe, following Froese & Ziemke (2009), that there are interesting differences between them. Basically, we will use the term enactivism to distinguish our position from a more general approach to the notion of embodiment, which seems to be content with arranging a closed sensorimotor loop that allows co-variation between internal models in the brain and the outside world. Although this is necessary, it is not sufficient, and in order to ensure real autonomy in agents, more needs to be added to the system.

How can we develop AI that adapts to the enactive principles? The most feasible way (and probably the only one) is to forget completely about multiple realizability and the omnipotent power of Turing machines, and to include both the physical structure of the system (the body of the appliance, shall we say) and the environment as key elements of computation. We will explore this in the next section.

3. Enactive AI? Morphology to the rescue!

In order to develop AI that adapts to the principles of the enactive framework, we first have to face the assumed multiply realizable nature of minds. The Multiple Realizability Thesis (MRT; Putnam, 1967) has for many years been a good justification for the Cartesian-like methodology characteristic of the disciplines studying mind over the past decades (like philosophy of mind, psychology and cognitive science). This methodology operates under the assumption that mind can be explored and explained with no (or little) attention to the body. Putnam had conceptual and empirical arguments for MRT. They both constituted arguments against the Identity Theory, a cutting-edge theory at the time that claimed that states and processes of the mind are states and processes of the brain (Place, 1956; Feigl, 1958; Smart, 1959). The conceptual argument originates from the assumption that the Turing machine is the right way to conceive minds. The empirical argument draws attention to the fact that mental states of the sort humans possess may be had by creatures that differ from us physically, physiologically, or neurobiologically. If we are willing to attribute to other creatures, like octopi or a potential extraterrestrial form of life, the same mental states that we have (e.g. pain or hunger), then we have to detach mind from a particular physical structure (e.g. the human brain). The important criterion for mental sameness here is not physical sameness but functional sameness. Therefore, the particular matter that minded creatures are made of, and the particular body they have, is of minor importance when studying and explaining how their minds work.

This disembodied methodology has also dominated AI, again because of some form of multiple realizability. As stated by the cognitivist paradigm, cognition has to do with algorithms (operating over representations), and, until recently (the 1980s), AI was exclusively concerned with finding effective algorithms. Algorithms are platform-free, that is, the same algorithm can be implemented in different physical structures. Algorithmic minds are free from the (constraints of the) body.

However, MRT has been called into question. Recent works argue that the evidence in favor of MRT as an empirical claim is not as convincing as many philosophers have been claiming (Bickle, 1998, 2003; Bechtel & Mundale 1999; Shapiro 2000, 2004; Polger, 2002). It seems reasonable to say, for example, that in the same way that an octopus and I do not share the neural mechanisms underlying the psychological state of being hungry, we also do not share the same psychological state of being hungry (Bechtel & Mundale, 1999). Putnam’s MRT uses a coarse-grained analysis when referring to psychological kinds. This is a legitimate practice in science. But when it comes to considering brain states, Putnam uses a fine-grained analysis. When the same grain is employed at both levels (psychological and neurological), the picture we get is a different one. A coarse-grained analysis allows for similarities at both physical and psychological levels, while a fine-grained inspection drives us to the conclusion that particular mental processes might require particular physical structures. Neural plasticity, in turn, has been alleged as evidence for MRT (Block & Fodor, 1972). For example, the capacity of the brain to process language sometimes in the right hemisphere is said to be evidence for MRT. We should be cautious, however, in concluding that. In those arguments, the emphasis is placed on the location of the mental activity, and not on the processes by means of which the mental activity is produced. The processing of language in the right hemisphere might be done in the same way (by means of the same processes) as it is done in the left hemisphere, and therefore does not necessarily constitute an interesting case of multiple realizability. An interesting case involves different processes producing the same function. As long as the neural plasticity argument elaborates only on differences in location, it lends no support to MRT.
In response to Putnam’s conceptual argument, we can simply note that the power of the Turing Machine metaphor, and in general of the computational functionalism developed by Putnam (1960, 1967), has been dismissed in recent years.

Our first conclusion can be, on the one hand, that as an empirical claim about minds, MRT cannot be used as a justification anymore, at least not in the unchallenged way that has dominated the scene until recently. On the other hand, as a foundation for a theoretical approach and methodology to develop artificial intelligent agents, operating under the assumption that mental processes are independent of the physical structure, it is unsatisfactory. We have seen that although successful in some domains, algorithmic AI is not providing us with an understanding of how natural forms of intelligence work.

The specific line of criticism against MRT that most affects our goal here is the one that, according to some, follows from accepting the tenets of the embodied mind program. We can find two different readings of the embodied mind view, corresponding to two very different senses of embodiment, and only one of them challenges MRT. A functionalist reading claims that the body plays a computational role in cognition, although the physical details of implementation are not important (Clark, 2006; 2007; 2008). Under this interpretation, mental processes are still multiply realizable, but this time the implementational base spreads to include the body and the environment. A reductionist reading, however, holds that the details of implementation are a constraint on mind, and so mental processes are not multiply realizable in different bodies and across different environments. Differences in morphology are going to make a difference in mental processes (Shapiro, 2004; Noë, 2004).

The reductionist reading advocates a more radical interpretation of the embodied view, which may develop into a paradigm alternative to the representationalist and computationalist models of mind. The notions of coupling, action and sensorimotor coordination are contrasted with functional, computational and information-processing notions. The functionalist reading, by contrast, proposes a reconciliatory picture, and the new notions from the embodied program are integrated in the (old) functionalist model of the mind, where representations and computations are still the keystone of cognition, and mental processes keep their platform-free privilege. But now the body and the environment are participants as important as the brain.

The reductionist interpretation matches the enactive trend within cognitive science. A good illustration of this fact is the sensorimotor approach to perception developed in O’Regan & Noë (2001) and Noë (2004). According to this approach, perception consists of the implicit knowledge we have of sensorimotor regularities, that is, the relations between movement and change, and sensory stimulation (what are called sensorimotor dependencies). Perception here is a matter of actions, not internal representations. The range of actions an organism performs has to do, in turn, with the sort of body it has. It follows from this that differently embodied organisms, engaging in different sensorimotor loops with the world, are going to perceive the world in different ways. An organism with two eyes separated by 180º (one in what we would call the front, the other in the back) will engage in different sensorimotor loops when visually perceiving an object. Its gross-bodily and eye movements will relate to visual input in a way that differs from the way we humans relate to visual input. Thus, this approach to perception has the (radical) consequence that the particularities of our bodies are a constraint on how we perceive.

The functionalist reading of the embodied mind program holds, as we said, that the fine-grained details of an organism’s body are not a constraint on mind. In particular, they do not determine how we perceive. Although embodiment, action and embedment are significant features when we consider thought and experience, the (embodied) functionalist says, their contributions are more complex than a mere direct relation. There is a buffer zone between the fine details of body and motion-dependent sensory input, and the internal representations that determine perception. Perception ultimately depends on representations and computational processes that are insensitive to the fine details of the world-engaging sensorimotor loops. The specific sensorimotor dependencies are only the contingent means to pick up external information. It is this higher level of information-processing that determines experience. Thus, differently embodied organisms, interacting with objects in different ways, could, in principle, have the same perceptual experience, as long as they have access to the same gross information and then can form the same internal representations.

The sensorimotor approach to perception relates mental processes, and perception in particular, to action, bringing mentality out of the realm of internal representations. This contrasts with the (less radical) view we just mentioned, also within the embodied mind paradigm, where perception, and mental processes in general, are still a matter of (internal) representations. For this reason, the sensorimotor approach to perception provides us with a good starting point to figure out what an enactive AI should be like. And it does so not only because of its strong points, but also because of its limitations. Sensorimotor loops by themselves do not allow us to talk of an agent’s intentional action (other than metaphorically). A notion of selfhood or agency is needed (Thompson, 2005). A detailed analysis of how to remedy this lack is given in Froese & Ziemke (2009). Here, we are only concerned with one of their conclusions: the aboutness of our cognition “is not due to some presumed representational content that is matched to an independent external reality (by some designer or evolution)” (ibid, p. 33), but has to do with the continuous activity of the organism. Life and (its corollary) movement are here in a continuum with mind.

In order to develop an enactive AI, we need to rely on this more radical (reductionist) interpretation of the embodied program. Hence, in exploring how we can bring the enactive approach to the AI lab, firstly, we need to ignore the multiple realizability of natural minds (Putnam’s MRT) and algorithms, and focus our attention on how to develop systems that inhabit their (particular) bodies that, in turn, inhabit their (particular) environments. This will provide our AI projects with better results and, more importantly, with a better understanding of how (natural) organisms interact with their environment. At this point, the brain-in-a-body (controller-in-an-actuator) caricature that used to rule the mind sciences disappears. The clean division between mechanical design and controller design is, therefore, no longer useful. Natural organisms evolve with particular bodies in particular environments, and exploit the potentialities of them. Since intelligent behaviour is not the result of a pre-programmed set of functions instructing a passive body, in order to build intelligent robots we need to explore the many ways natural organisms exploit their physical structures and their surroundings, and how intelligent behaviour emerges from that. The goal for enactive AI is not to simulate the abstract operations happening in the brain (algorithmic AI), but the physical interactions between the whole organism (with its particular body) and its environment.

It is time to explore the potential of embodiment in artificial systems. We will do that by means of the notion of morphological computation. This notion was first introduced and explained by Chandana Paul (2004), and refers to the phenomenon of the bodily details taking charge of some of the work that, otherwise, would need to be done by the controller (be it the brain or a neural network). That is, computation obtained through interactions of physical form. We will introduce this notion in more detail with a particular example that in turn will serve us as the inspiration for our own proposal.

Paul (2004, 2006) offers an example of morphological computation, where a robot controlled by two perceptrons (Rosenblatt, 1962) comes to exhibit, courtesy of its morphology, an XOR-like behaviour. The possibilities of the morphology of an agent’s body have been exploited in different ways in the study of adaptive behavior. There are several examples of robots and vehicles that try to make the most of the details of embodiment in order to minimize the control required (Brooks, 1999; Braitenberg, 1984). Paul’s robot uses two perceptrons as controllers. Perceptrons are very simple connectionist networks consisting of two layers (an input layer and an output layer), modifiable connection weights and a threshold function.

Perceptrons can compute functions such as (inclusive) OR and AND, but not more complex ones such as exclusive-or, also written as XOR (Minsky & Papert 1969) (see fig. 1). Perceptrons learn to generalize on the basis of physical similarity. If a connectionist network has been trained to classify pattern 111000, then it will tend to classify a novel pattern 111001 in a similar way, on the basis of the (physical) similarity (or similarity of form) between these two patterns. Perceptrons, then, are suitable for computing linearly separable functions, such as (inclusive) OR and AND (see fig. 2 & 3), but not linearly inseparable ones such as XOR. To compute XOR we need an extra node or two (hidden units) between the input and the output layers (see fig. 4). In the single-hidden-unit case, this unit becomes active when both inputs are active, sending a negative activation to the output unit equivalent to the positive activation that the output unit receives from the input units.
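The contrast between linearly separable and linearly inseparable functions can be made concrete with a minimal sketch. The weights, thresholds and the helper names (`perceptron`, `xor_with_hidden_unit`, `single_layer_solves_xor`) are illustrative assumptions of ours, not values taken from Paul or from Minsky & Papert; the grid search only illustrates, and does not prove, that no single threshold unit computes XOR.

```python
from itertools import product


def step(activation, threshold):
    """Threshold function: the unit fires (1) when activation reaches the threshold."""
    return 1 if activation >= threshold else 0


def perceptron(weights, threshold):
    """Build a two-input, single-output threshold unit (hypothetical helper)."""
    def unit(a, b):
        return step(weights[0] * a + weights[1] * b, threshold)
    return unit


# Hand-picked (assumed) weights: both functions are linearly separable.
OR = perceptron((1, 1), 1)
AND = perceptron((1, 1), 2)


def xor_with_hidden_unit(a, b):
    """XOR with one hidden unit, following the description in the text."""
    hidden = AND(a, b)  # active only when both inputs are active
    # The hidden unit sends -2 to the output, cancelling the +2
    # that the output receives from the two active inputs.
    return step(a + b - 2 * hidden, 1)


def single_layer_solves_xor(grid):
    """Search a finite grid of weights and thresholds for a single unit computing XOR."""
    for w1, w2, t in product(grid, repeat=3):
        unit = perceptron((w1, w2), t)
        if all(unit(a, b) == (a ^ b) for a, b in product((0, 1), repeat=2)):
            return True
    return False


if __name__ == "__main__":
    for a, b in product((0, 1), repeat=2):
        print(a, b, OR(a, b), AND(a, b), xor_with_hidden_unit(a, b))
    grid = [x / 2 for x in range(-6, 7)]  # -3.0 .. 3.0 in steps of 0.5
    print("single unit found for XOR:", single_layer_solves_xor(grid))
```

The search comes back empty for any grid, because the four XOR constraints on a single threshold unit are jointly unsatisfiable: the threshold must be positive, each weight must reach it, and yet their sum must stay below it.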

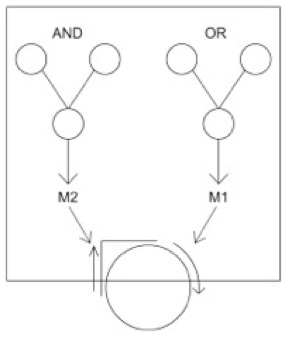

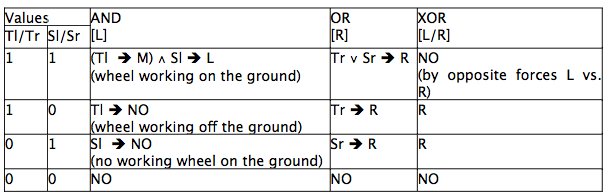

The robot receives two inputs, A and B. One network computes OR and the other computes AND. Each network is connected to a motor. The network computing OR is connected to motor 1 (M1), which turns a single wheel at the center that causes forward motion. The AND network is connected to motor 2 (M2), which serves to lift the wheel off the ground. Thus, M1 will activate the wheel if either or both inputs are active, and M2 will raise the wheel off the ground if and only if both inputs are active (see fig. 5). When both inputs A and B are off, both networks output 0, so the wheel is neither raised from the ground nor turned, and the robot is stationary. When input A is active and input B is off, the AND network outputs 0, and the wheel stays grounded. But the OR network outputs 1, and M1 causes the wheel to turn (so the robot moves forward). When B is active and A is off, the same thing happens: the AND network delivers 0 and the OR network delivers 1, so the robot moves forward. The interesting case is when A and B are both active. In this case the OR network makes M1 turn the wheel, but the AND network lifts the wheel from the ground, so the robot remains stationary. Summarizing the behaviour of the robot in a table, we discover that it looks like the truth table of the XOR function (see fig. 6). The explanation is that “the robot’s behaviour is not simply determined by the output of the neural networks, but also by the actuated components of the body” (Paul, 2004, p. 2).
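The behaviour just described can be reproduced in a few lines. This is a sketch under our own assumptions, not Paul's implementation: the function names (`or_network`, `and_network`, `robot_moves`) and the unit weights are hypothetical, and the physics is reduced to one rule, namely that a turning wheel only propels the robot while it touches the ground.

```python
from itertools import product


def or_network(a, b):
    """Perceptron computing OR (assumed weights 1, 1 and threshold 1)."""
    return 1 if a + b >= 1 else 0


def and_network(a, b):
    """Perceptron computing AND (assumed weights 1, 1 and threshold 2)."""
    return 1 if a + b >= 2 else 0


def robot_moves(a, b):
    """Return 1 if the robot moves forward, 0 if it stays stationary."""
    wheel_turning = or_network(a, b) == 1  # M1 turns the wheel
    wheel_lifted = and_network(a, b) == 1  # M2 lifts the wheel off the ground
    # Neither controller computes XOR; the exclusive-or appears only
    # in the interaction between the wheel and the ground.
    return int(wheel_turning and not wheel_lifted)


if __name__ == "__main__":
    print("A B moves")
    for a, b in product((0, 1), repeat=2):
        print(a, b, robot_moves(a, b))
```

Note that the last line of `robot_moves` stands in for the body and the ground, not for a third network: in the physical robot nothing computes that conjunction explicitly.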

Paul’s robot, as we said above, is an illustration of morphological computation. The simple physical interactions of the robot’s body with its environment give rise to computation. Under the functionalist reading of the embodied program, this case is an example of what can be dubbed “participant machinery”. This means that the body is “part of the very machinery by means of which mind and cognition are physically realized” (Clark, 2008, p. 207). The body here is considered as (just) the implementational base for the cognitive processes. This is because, the functionalist argues, the only difference between the XOR robot and the standard (disembodied) computation of XOR (with a three-layered feedforward network) is a difference in the physical structure implementing the function. Morphology in Paul’s robot is playing the same computational role that the hidden unit plays in the XOR network. The robot’s active body “is providing the functional equivalent of the missing second layer of neural processing: the extra processing that would be required to solve the linearly inseparable problem of computing XOR” (Clark, 2008, p. 209). According to the reductionist interpretation, however, the difference between the XOR robot and the standard computation of XOR is not only an implementational difference. The robot and the three-layered network, so the reductionist argues, are not performing the same computational processes.

Independently of which reading we choose to be the best, the important lesson to draw from this example is that the robot is, in the same way that evolved biological intelligences are, and unlike how methodologically engineered solutions in (classical, disembodied) artificial systems work, exploiting the possibilities of its physical structure. Thus, we see that we cannot explain the robot’s behaviour by exclusively looking at what its controllers do. The whole agent, its mechanical properties as well, has to be considered in order to understand what it does and why. It is in this line that, we propose, artificial agents should be developed. Designing the proper morphology of our robots will provide us not only with a cheaper and easier control architecture, but more importantly, with a better insight into how living systems adapt to their environment through the exploitation of their physical structure.

4. Previous research: simulations and Lego NXT robots.

Prior to the current research we were working on simulating synthetic emotions, always trying to create very simple situations in which we could elucidate the value of basic emotions (pain and pleasure) for the emergence of complex activities. Our interest in emotions is not only a question of affinity with the topic but stems from the strong belief that emotions are basic intentional forces for living entities. Therefore, emotions should be the keystone of the whole edifice of AI and robotics. Since emotions are a natural system that most living intelligent creatures use to calibrate their relationship with the environment and their own plans and goals, they are key factors to study when we want to understand how autonomous living systems develop their own goals.

We developed two different computer simulations, which we called TPR and TPR.2.0. Both models and results were published as Vallverdú & Casacuberta (2008) and Vallverdú & Casacuberta (2009a, 2009b), respectively. Let us summarize them.

4.1. TPR.

At the first stage of our research we developed a computer simulation (called The Panic Room or, more simply, TPR) dealing with synthetic emotions. TPR was written in Python and led us to interesting results. The basic conceptual and technical data of that simulation were:

• Hard-wired emotional states (proto-emotions).

• Bottom-up approach.

• Python programmed.

The computational simulation was designed as a box with four doors (a,d,m,p), three switches (b,h,m), and 16 squares {a,b…p}:

The system simulated a room in which passers-by could walk around randomly. There were three switches distributed around the room. If a user was able to disconnect the three switches in rapid succession, the power was cut to the main computer running the entire environmental construction and the whole system failed. However, if the system was able to detect such an attack in time, it had a few seconds to acquire an alternative source of electricity before the user turned off the final switch. To make the process more interesting, the system did not have access to information about whether the switches had been turned off (pain) or not (pleasure). By means of a deterministic algorithm, one not capable of change through learning, we designed the system to distinguish between a harmless and a harmful intruder. Each movement by the user either raised or lowered a fear signal. As the fear increased, the system checked the signals coming from the more relevant sensors more frequently. Once the signal went beyond a certain threshold, the system entered “panic mode” and grabbed the alternative source of electricity. When the fear signal had descended enough for the system to consider that the danger had passed, it returned to its normal activity, getting electricity again from the usual source.
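The fear-signal mechanism just described can be illustrated with a minimal Python sketch. It is not the original TPR code: the class name `PanicRoom`, the two threshold values, the size of the fear increments and the polling formula are all assumptions chosen only to make the described behaviour concrete (a deterministic rule, a fear signal that rises and falls, faster sensor polling under fear, and a switch to the alternative power source beyond a threshold).

```python
from dataclasses import dataclass


@dataclass
class PanicRoom:
    """Toy fear-signal controller. All numeric values are illustrative assumptions."""
    fear: float = 0.0
    panic_threshold: float = 3.0   # assumed fear level that triggers panic mode
    calm_threshold: float = 1.0    # assumed fear level at which normal activity resumes
    power_source: str = "main"

    def observe(self, delta: float) -> str:
        """Apply one user movement: delta > 0 raises fear, delta < 0 lowers it."""
        self.fear = max(0.0, self.fear + delta)
        if self.fear >= self.panic_threshold:
            self.power_source = "backup"   # panic mode: grab the alternative source
        elif self.fear <= self.calm_threshold:
            self.power_source = "main"     # danger has passed: back to the usual source
        return self.power_source

    def polling_interval(self, base: float = 1.0) -> float:
        """Seconds between sensor checks; shrinks as fear grows (assumed formula)."""
        return base / (1.0 + self.fear)


room = PanicRoom()
for delta in (1.5, 2.0, -1.0, -2.0):
    print(delta, room.observe(delta), round(room.polling_interval(), 2))
```

Using two thresholds rather than one is a design choice of this sketch: it keeps the system on the alternative source until the fear signal has clearly subsided, which matches the description of the system returning to normal only once the signal has descended enough.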

With TPR, we were merely trying to design an artificial device able to learn from, and interact with, the world by using two basic information types: positive and negative. These can be considered as proto-emotions and, assuming we can establish this analogy with human emotions, we could emulate their usefulness in the fight for survival by creating helpful behavioural rules such as “this is harmful, don’t touch it” or “this produces pleasure, eat it”. We were developing the first steps towards an evolutionary machine, defining the key elements involved in the development of complex actions (that is, creating a physical intuitive ontology, from a bottom-up approach).

From the programming perspective, in TPR 1.0 we just used global variables in order to represent the emotional values. That means that the system actually kept ‘memories’ of the former emotional states. This, of course, is somewhat unrealistic, and wanting to pursue a bottom-up approach as much as possible, we decided later (at the next stage of the current research) to change this and give the system, now called ‘TPR 2.0’, a very basic memory instead. The system labelled the signals from the sensors that described the surroundings as either negative or positive. Either option had a specific signal that was used to change the way further perceptual signals would be processed, as well as to generate possible behavioural responses to a potential danger. Responses were automatic and embedded (or hard-wired) in the system (therefore, they are an intentional, but not conscious, force). All the computations were based on the relative strengths of the two protoemotional signals. If the negative signal reached a certain threshold it would activate the defensive action and would switch to the emergency circuit. Positive signals tried to calm down the system in order to avoid that reaction.

TPR could easily distinguish between dangerous and innocent situations from its basic emotional structure (using pain and pleasure). Therefore, we showed that emotions, as hardwired conditions of the system, are intentional maps of action that make possible an effective interaction with the world without the necessity for complex programming. At the same time, TPR was able to develop correct escalation responses through [pain-> pain+-> panic] or [pleasure->happiness] states. This reveals that with just two activation signals (pain and pleasure), it was possible to allow the TPR to carry out a coherent survival activity. As a consequence, we concluded that a hardwired approach to ambient intelligence was possible with TPR.

4.2. TPR.2.0

After the successful initial results of TPR, we considered it necessary to develop a new simulation, more complex and with better visualisation characteristics. We therefore developed a second version, TPR 2.0, using the programming language Processing, with improvements such as: a better visual interface; a database which could record and easily recall the information on all the paths inside the simulation (human and automatically generated ones); and, finally, a small memory capacity, which was a next step in the evolution from simple hard-wired activities to self-learning by simple experience.

TPR 2.0 was equipped with several simulated components: 4 doors (in/out), 3 switches which activated emotional responses, 4 sensors which detected proximity to switches and movement inside the room and, finally, 4 light devices which showed the emotional state of the room. Like its predecessor, TPR 2.0 automatically evaluated a situation as being either neutral or dangerous, generated an automatic response that dealt with the specific danger detected, and could escalate that response. That is, it could make the response stronger or weaker depending on the degree of danger that was detected. The possible generated pathways were:

The process by which TPR 2.0 changes from one state to another can be defined by the following rules, where s is the signal, em is the echoic memory, + means positive path, – means negative path, and relaxed, pain, intense pain and panic are the possible final states (relaxed = 0, pain = 1, intense pain = 2, panic = 3):

If s is + and em is + then relaxed

If s is + and em is – then increment the state by 1

If s is – and em is + then increment the state by 1

If s is – and em is – then increment the state by 1

Let us emphasize that the simulation had a short memory (echoic memory) which could hold the previous state for a small amount of time (+ or – ). Once this time is passed, and in case there is no new signal, the next state is always a positive one (+).
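The transition rules and the decaying echoic memory can be sketched as follows. This is an illustrative Python reconstruction, not the original Processing code: the names `next_state`, `EchoicMemory` and `run`, the three-second memory lifetime, and the decision to cap the state at panic (3) are assumptions introduced here.

```python
STATES = ("relaxed", "pain", "intense pain", "panic")  # 0, 1, 2, 3


def next_state(state: int, signal: str, echoic: str) -> int:
    """Apply the four rules: only (+, +) relaxes; every other pair escalates by 1."""
    if signal == "+" and echoic == "+":
        return 0
    # Assumption: the state cannot rise beyond panic.
    return min(state + 1, len(STATES) - 1)


class EchoicMemory:
    """Holds the previous signal for a short time, then falls back to '+'."""

    def __init__(self, lifetime: float = 3.0):  # lifetime in seconds is assumed
        self.lifetime = lifetime
        self.value = "+"
        self.stamp = 0.0

    def store(self, value: str, now: float) -> None:
        self.value = value
        self.stamp = now

    def recall(self, now: float) -> str:
        # Once the lifetime has passed, the memory has vanished: report '+'.
        if now - self.stamp > self.lifetime:
            return "+"
        return self.value


def run(events, lifetime: float = 3.0):
    """events: iterable of (time, signal) pairs; returns the visited state names."""
    memory = EchoicMemory(lifetime)
    state = 0
    trace = []
    for now, signal in events:
        state = next_state(state, signal, memory.recall(now))
        memory.store(signal, now)
        trace.append(STATES[state])
    return trace


print(run([(0, "-"), (1, "-"), (2, "-"), (3, "+"), (10, "+")]))
```

In this run three negative signals in quick succession escalate the room to panic, a single positive signal arriving while the negative memory is still fresh does not yet relax it, and a positive signal arriving after the memory has decayed returns it to the relaxed state.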

There were five basic differences between TPR and TPR 2.0:

a) Programming language. The first version was made in Python and this second one is made in Processing. We found Processing a lot easier and more powerful for producing an animated version of the simulation. This change of programming language implies several changes in the general architecture of the simulation (these include better usability across different platforms, a friendlier interface and a database).

b) Echoic memory. This is the main theoretical improvement on our first version. In TPR 1.0 we used global variables in order to store the emotional values. Because of this the system kept memories of the former emotional states. TPR 2.0, however, exhibits a very basic memory. After a few seconds the echoic memory degraded and finally vanished, returning to a neutral state as if nothing had happened.

c) Pleasure states deleted. TPR 1.0 also had scalable pleasure states, which have been deleted in our new simulation. TPR 2.0 only has relaxed, pain, intense pain and panic modes. The reason for this change was twofold: (i) we considered pain states to be more basic than pleasure ones (although the relaxed state can be considered a positive situation, or one lacking pain; this could be seen as a Buddhist approach to the biological nature of living entities); and (ii) we paid more attention to the need for a simpler and more elegant simulation with fewer elements to be processed, but with a more in-depth analysis.

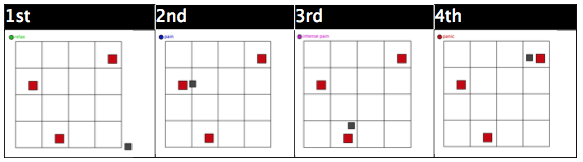

d) Visual interface. TPR 2.0 has a better visual interface (which makes possible the interaction between TPR 2.0 and human beings, as well as the generation of automatic paths). It accepts mouse input, and emotions are labelled with colours and text which appear on the screen as soon as they are generated (i.e. relaxed: green; pain: blue; intense pain: purple; panic: red).

e) Database. TPR 2.0 has a database which can record and also easily recall information on all the paths followed inside the simulation.

In the diagram below several basic screen captures of the simulation are displayed. The reader should notice that in TPR 2.0 the protoemotion is indicated with a word and a colour, to make clearer the usual reactions that occurred during the simulation (from relaxed to pain, intense pain and panic):

Both TPR and TPR 2.0 dealt with ambient intelligence, and they are presented here as our first analysis of how there is no clear-cut distinction between the internal structure of the system and the environment that surrounds it. TPR and TPR 2.0 are good illustrations of the significance of morphology, for the way sensors are distributed within the space is critical. It is the results obtained in these former experiments that led us towards morphological computing. In the next section we will introduce our work on morphological computation with real systems (Lego Mindstorms NXT).

5. Morphological Computing as Cognition: XOR Robots.

After our previous work with computer simulations (TPR and TPR 2.0) we decided to implement our philosophical model in real robots. We chose Lego Mindstorms NXT for several reasons (a detailed argument in favor of Lego Mindstorms can be found in Dautenhahn et al. 2002, Chapter 8), the most important of which are:

– Inexpensiveness

– Simple object-oriented programming interface, NXT-G, with the possibility of working with the complete Labview suite (not included with the basic equipment but available for us).

– Ease of manipulation.

– Availability of different sensors.

– Bluetooth connectivity.

We acquired 6 NXT units for our research activities and we started to implement our models of synthetic emotions in them. Part of this research on the relationship of the somatic marker with proto-emotions is still being developed with other robotic designs. Our robots allowed us to work with the idea of a loop, as a biological cybernetic feedback, implemented in their programming. We chose robots with movement to simulate living entities like animals that are looking for food, security, etc. To avoid the complexity of typical six- or eight-legged bugs, we chose wheels as the simplest (but perhaps not always effective) means of movement for our robots. We present here the results on the importance of embodiment for robotic systems, not in a trivial sense (robots are physical objects) but in the stronger sense we defended in section 3. Robots’ bodies perform certain complex actions. In this sense, we can affirm that the morphology determines not only the behaviors that can be performed, but also the amount of control required for these behaviors. Particularly in systems where behavior is obtained through purely sensory-motor interactions of the body with the environment, the morphology is of prime importance.

Elaborating on Paul’s notion of morphological computation, we expanded her thought experiment (i.e. the XOR robot we presented above), designing a real NXT robot which computes XOR courtesy of its morphology. Paul’s idea was to compute XOR from AND + OR. The problem we are concerned with here is how to compute it with a real robot. Two possible answers are (a) with a robot controlled by a three-layered feedforward network, and (b) with a robot controlled by some logic programming language (for example, the LEGO NXT-G software has a Logic block which computes XOR). But could it be obtained exclusively through its morphology? The answer is definitely yes, as we will see in the next section.

5.1. XOR as in Paul’s initial thought experiment.

Following, to a certain degree, the indications provided by Paul (2006), we created this robot, which we call XOR-1.

The structure of the robot is very simple: two paired sensors (one touch sensor and one sound sensor) can activate the three motors. The right motor moves the robot towards the right side, while the left motor moves it towards the left. A third motor keeps the left motor’s wheel off the ground unless both input sensor values are positive (true or 1), that is, unless there is an AND situation; otherwise the left motor can only spin its wheel in the air, and so produces no movement. In the AND situation the left wheel is on the ground and the left motor moves the robot towards the left side. The right motor is activated if sensor A or sensor B is positive (i.e. the function OR). Thus, since the left motor computes AND and the right motor computes OR across all four input situations, the robot as a whole follows an XOR rule: in the true-true case the two motors try to move the robot in opposite directions, and so no movement is accomplished at all. We can summarize it with this table:

XOR-1, as we see, computes XOR from AND and OR. With this simple case we see that morphology can be used to encode complex logical design, without the aid of programming or the control of neural nets. But the design can be made even simpler, as we will see in the next section.
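The way XOR-1 obtains XOR from AND and OR can be checked with a short Python simulation. This is only a sketch of the logic, not of the robot’s mechanics: the function name `xor1_motion` and the convention of encoding a rightward push as +1 and a leftward push as -1 are assumptions made for illustration.

```python
def xor1_motion(a: bool, b: bool) -> int:
    """Net displacement of a simulated XOR-1 for sensor inputs a and b.

    Assumed encoding: +1 = robot moves right, 0 = robot stands still.
    """
    # The third motor lowers the left wheel to the ground only when both inputs are true.
    left_wheel_on_ground = a and b
    # The right motor drives whenever either input is true (OR).
    right_push = 1 if (a or b) else 0
    # The left motor pushes the opposite way, but only if its wheel touches the ground (AND).
    left_push = -1 if left_wheel_on_ground else 0
    return right_push + left_push


print("A      B      AND    OR     moves")
for a in (False, True):
    for b in (False, True):
        moves = xor1_motion(a, b) != 0
        print(f"{a!s:<6} {b!s:<6} {(a and b)!s:<6} {(a or b)!s:<6} {moves}")
```

The robot moves exactly when one input, but not both, is true: in the true-true case the two pushes cancel, which is the XOR rule realized by the body rather than by a logic block.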

5.2. The simplest XOR robot

After achieving the XOR-1 robot we decided to make a simpler design which could compute XOR without including AND or OR (morpho)logical conditions. Then we created the robot called XOR-2.

We have not programmed it for any extra activity, such as “look for food” (e.g. a colour) or “avoid danger” (e.g. an object that is too close). With this robot we just want to show that a very simple morphological design can control and determine the general behaviour of the robot.

5.3. Adding morphological complexity to the XOR robot.

Our third and last example of the potential of morphological computation, understood as a practical example of the enactive approach to computing and robotics, is XOR-3, an evolution of XOR-2. Imagine a situation in which the touch sensors were automatically hidden whenever a particular environmental condition held. In that case, the robot could only compute XOR in specific situations. XOR-3 hides its two touch sensors when there is too much light. Thus, it computes XOR only under particular conditions. With this example we see that the particular morphology the system has, together with the environmental structure, is what explains its behaviour.
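The environmental gating of XOR-3 can be pictured with a very small Python sketch. It models only the input-output behaviour, not the hiding mechanism: the function name `xor3`, the normalized light scale and the value of `LIGHT_LIMIT` are hypothetical, and returning `None` is simply this sketch’s way of saying that nothing is computed while the sensors are hidden.

```python
from typing import Optional

LIGHT_LIMIT = 0.5  # assumed threshold on a 0..1 light scale (hypothetical value)


def xor3(a: bool, b: bool, light: float) -> Optional[bool]:
    """XOR of the two touch inputs, available only when the light is low enough."""
    if light > LIGHT_LIMIT:
        # Too much light: the touch sensors are hidden, so no XOR is computed.
        return None
    return a != b


print(xor3(True, False, light=0.2))  # sensors exposed
print(xor3(True, False, light=0.9))  # sensors hidden
```

The same pair of touches therefore yields different behaviour depending on the ambient light, which is the point of the example: body and environment jointly determine what is computed.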

6. Some philosophical conclusions

We hope that our theoretical discussion has shed some light on alternative ways to consider the design of intelligent beings. More specifically, we tried to show that embodiment means more than simply having a body and that the enactive approach is not compatible with a functionalist view that assumes that mental processes are multiply realizable. To summarize, intelligence implies autonomy, and autonomy implies a serious commitment to the view that precise morphological structures and ambient traits play a major role in cognitive processes. Within an enactive AI background, some classical theoretical problems can be more easily solved. A good example of this is Dennett’s well-known frame problem (Dennett 1984). The core of the problem consists in the fact that a symbolic cognitive system does not have any specific priorities and, in Dennett’s example, is not able to realize that the really important move is to disconnect the bomb or leave the room as soon as possible. Instead, the system goes on generating true but unhelpful statements about the colors or the volume of the room, and so on. However, if we have a system that has both autonomy and adaptivity, the frame problem is no longer a problem, because the system is able to set its own goals (autonomy) and those goals are realistically coupled with the environment (adaptivity).

Here we proposed three robots as examples of how morphology can play a computational role. Including morphology in computations is a way to minimize the control required. This, however, does not have to be seen as simply an alternative and effective strategy to design artificial agents. The primary lessons of morphological computation are not lessons in cheaper designs, but in the nature of intelligent activities. Natural intelligence is the result of the continuous interplay between the control system, body and environment. By including morphology in our artificial designs we are getting a better insight into natural forms of intelligence.

Acknowledgements

This work was supported by the TECNOCOG research group (at UAB) on Cognition and Technological Environments, [FFI2008-01559/FISO], and has been developed by SETE (Synthetic Emotions in Technological Environments) research group. The Philosophy Department (at UAB) has also provided part of the funding for the acquisition of the NXTs.

References

Bechtel, W. & Mundale, J. (1999). Multiple Realization Revisited: Linking Cognitive and Neural States. Philosophy of Science, 66, 175-207.

Bickle, J. (2003). Philosophy and Neuroscience: A ruthlessly reductive account. Dordrecht, The Netherlands: Kluwer Academic Pub.

Bickle, J. (1998). Psychoneural Reduction: The New Wave. Cambridge, MA: MIT Press/Bradford Books.

Block, N. (1996). Functionalism. The Encyclopedia of Philosophy Supplement. New York: Macmillan.

Block, N. & Fodor, J. (1972). What Psychological States Are Not. Philosophical Review, 81, 159-181.

Braitenberg, V. (1984). Vehicles: Experiments in Synthetic Psychology. Cambridge, MA: MIT Press.

Brooks, R. A. (1991). Intelligence without representation. Artificial Intelligence, 47(1-3), 139-160.

Brooks, R. A. (1999). Cambrian Intelligence: The Early History of the New AI. Cambridge, MA: MIT Press.

Calvo, P. & Symons, J. (2008). Radical Embodiment and Morphological Computation: Against the Autonomy of (some) special sciences. In Reduction and the Special Sciences, Tilburg, April 10-12.

Clark, A. (2006). Pressing the flesh: Exploring a tension in the study of the embodied, embedded mind. Philosophy and Phenomenological Research, 76(1), 37 – 59.

Clark, A. (2007). Curing the Cognitive Hiccup: A Defense of the Extended Mind. Journal of Philosophy, 104(4).

Clark, A. (2008). Supersizing the Mind. Embodiment, Action, and Cognitive Extension. Oxford, UK: Oxford University Press.

Damasio, A. (1994). Descartes’ error: Emotion, Reason and the Human Brain. New York: Putnam Publishing.

Dautenhahn, K., Bond, A., Cañamero, L. & Edmonds, B. (2002). Playing the Emotion Game with Feelix. What Can a LEGO Robot Tell Us about Emotion? Socially Intelligent Agents Creating Relationships with Computers and Robots. New York: Springer.

Dawkins, R. (1982). The Extended Phenotype. Oxford, UK: Oxford University Press.

Dennett, D. (1984). Cognitive wheels: the frame problem of AI. In Hookway, (ed.) Minds, Machines and Evolution. Cambridge, UK: Cambridge University Press.

Dreyfus, H. L. (1972). What Computers Can’t Do: A Critique of Artificial Reason. New York: Harper and Row.

Dreyfus, H. L. (1992). What Computers Still Can’t Do: A Critique of Artificial Reason, Cambridge, MA: The MIT Press.

Dreyfus, H. L. (2007). Why Heideggerian AI failed and how fixing it would require making it more Heideggerian, Philosophical Psychology, 20(2), 247-268.

Di Paolo, E. A. & Iizuka, H. (2008). How (not) to model autonomous behaviour. BioSystems, 91(2), 409-423.

Eliasmith, C. (2002). The myth of the Turing machine: The failings of functionalism and related theses. Journal of Experimental and Theoretical Artificial Intelligence, 14(1), 1-8. http://www.arts.uwaterloo.ca/~celiasmi/Papers/Turing%20Myth.central.jetai.1ce.nofields.html

Flanagan, O. (2009). The really hard problem. Meaning in a material world. Cambridge, MA: MIT Press.

Froese, T. & Ziemke, T. (2009). Enactive artificial intelligence: Investigating the systemic organization of life and mind. Artificial Intelligence, 173, 466-500.

Hutchins, E. (1996). Cognition in the wild. Cambridge, MA: MIT Press.

Kant, I. (1790). Kritik der Urteilskraft, [Critique of Judgment], (trans. by: W. S. Pluhar). Indianapolis, IN: Hackett Publishing Company.

Kirsh, D. (1995). The intelligent use of space. Artificial Intelligence, 73(1-2), 31-68.

Kirsh, D. & Maglio, P. (1992). Reaction and reflection in Tetris. In J. Hendler, (ed.) Artificial intelligence planning systems, Proceedings of the first annual conference AIPS. San Mateo, CA: Morgan Kaufmann.

Kirsh, D. & Maglio, P. (1994). On distinguishing epistemic from pragmatic action. Cognitive Science, 18, 513-549.

Maglio, P., Matlock, T., Raphaely, D., Chernicky, B. & Kirsh, D. (1999). Interactive skill in Scrabble. In Proceedings of 21st annual conference of the Cognitive Science Society. Mahwah, NJ: Erlbaum.

Marr, D. (1982). Vision. San Francisco: W.H. Freeman.

Millikan, R. G. (1991). Language, Thought, and Other Biological Categories. Cambridge, MA: MIT Press.

Minsky, M. & Papert, S. (1969). Perceptrons. Cambridge, MA: MIT Press.

Moreno, A. & Etxeberria, A. (2005). Agency in the natural and artificial systems. Artificial Life, 11(1-2), 161-175.

Neth, H. & Payne, S. J. (2002). Thinking by doing? Epistemic actions in the Tower of Hanoi. In W.D. Gray & C. D. Schunn, (Eds.), Proceedings of the 24th annual conference of the Cognitive Science Society. Mahwah, NJ: Erlbaum.

Noë, A. (2004). Action in Perception. Cambridge, MA: MIT Press.

O’Regan, J. & Noë, A. (2001). A sensorimotor account of vision and visual consciousness. Behavioral and Brain Sciences, 24(5), 939-973.

Paul, C. (2004). Morphology and Computation. In S. Schaal, A. J. Ijspeert, A. Billard, S. Vijayakumar, J. Hallam, and J. A. Meyer, (Eds.), From Animals to animats: Proceedings of the eighth international conference on the Simulation of Adaptive Behaviour, Los Angeles. Cambridge, MA: MIT Press.

Paul, C. (2006). Morphological computation: A basis for the analysis of morphology and control requirements. Robotics and Autonomous Systems, 54(8), 619-630.

Pfeifer, R. & Bongard, J. (2007). How the body shapes the way we think. Cambridge, MA: MIT Press.

Pfeifer, R. & Iida, F. (2005). Morphological computation: Connecting body, brain and environment. Japanese Scientific Monthly, 58(2), 48-54.

Place, U.T. (1956). Is Consciousness a Brain Process? British Journal of Psychology, 47, 44-50.

Polger, T. (2002). Putnam’s Intuition. Philosophical Studies, 109(2), 143-170.

Polger, T. (2004). Natural Minds. Cambridge, MA: The MIT Press.

Putnam, H. (1960). Minds and Machines. In S. Hook (ed.), Dimensions of Mind, (pp. 148–180). New York: New York University Press.

Putnam, H. (1967). Psychological Predicates. In W. H. Captain & D. D. Merrill (eds.), Art, Mind and Religion, (pp. 37–48). Pittsburgh: University of Pittsburgh Press.

Rosenblatt, F. (1962). Principles of neurodynamics. New York: Spartan Books.

Shagrir, O. (2005). The rise and fall of computational functionalism. In Y. Ben-Menahem (ed.), Hilary Putnam (Contemporary Philosophy in Focus). Cambridge, UK: Cambridge University Press. http://book303.googlepages.com/Hillary_Putnam.pdf#page=234

Shapiro, L. (2000). Multiple Realizations. Journal of Philosophy, 97, 635-64.

Shapiro, L. (2004). The Mind Incarnate. Cambridge, MA: MIT Press.

Thompson, E. (2007). Mind in life. Cambridge, MA: Harvard University Press.

Varela, F. J., Thompson, E., Lutz, A. & Rosch, E. (1991). The Embodied Mind: Cognitive Science and Human Experience. Cambridge, MA: MIT Press.

Vallverdú, J. & Casacuberta, D. (2008). The Panic Room. On Synthetic Emotions. In A. Briggle, K. Waelbers & P. Brey (eds.), Current Issues in Computing and Philosophy, (pp. 103-115). Amsterdam: IOS Press.

Vallverdú, J. & Casacuberta, D. (2009a). Modelling Hardwired Synthetic Emotions: TPR 2.0. In J. Vallverdú & D. Casacuberta (eds.), Handbook of Research on Synthetic Emotions and Sociable Robotics: New Applications in Affective Computing and Artificial Intelligence, (pp. 103-115). Hershey, PA: IGI Global.

Vallverdú, J. & Casacuberta, D. (eds) (2009b). Handbook of Research on Synthetic Emotions and Sociable Robotics: New Applications in Affective Computing and Artificial Intelligence, (pp. 103-115). Hershey, PA: IGI Global.

Keywords

cognitivism: is the philosophical theory that considers that cognition has to do with algorithms (operating over representations), and, until recently (1980s), AI has been exclusively concerned with finding effective algorithms. Algorithms are platform-free, that is, the same algorithm can be implemented in different physical structures. Algorithmic minds are free from the (constraints of the) body.

enactivism: is a theoretical approach to understanding the mind proposed by Gregory Bateson, Humberto Maturana, Francisco Varela, Eleanor Rosch and Alec McPheters. It emphasizes the way that organisms and the human mind organize themselves by interacting with their environment. It is closely related to situated cognition and embodied cognition, and is presented as an alternative to cognitivism, computationalism and Cartesian dualism.

embodiment: Philosophers, cognitive scientists and artificial intelligence researchers who study embodied cognition and the embodied mind believe that the nature of the human mind is largely determined by the form of the human body. They argue that all aspects of cognition, such as ideas, thoughts, concepts and categories are shaped by aspects of the body. These aspects include the perceptual system, the intuitions that underlie the ability to move, activities and interactions with our environment and the naive understanding of the world that is built into the body and the brain.

morphological computation: is about connecting body, brain and environment. It is an advance within the embodiment approach, in which we consider the effect of morphology, materials, and environment on neural processing, or better, the interplay of all these aspects.

multiple realizability: In the philosophy of mind, the multiple realizability thesis contends that a single mental kind (property, state, event) can be realized by many distinct physical kinds. A common example is pain. Many philosophers have asserted that a wide variety of physical properties, states, or events, sharing no features in common at that level of description, can all realize the same pain. This thesis served as a premise in the most influential argument against early theories that identified mental states with brain states (psychoneural identity theories). The argument has even been employed to challenge the functionalism it initially motivated.

XOR: or ‘exclusive disjunction’. Operation on two logical values, typically the values of two propositions, that produces a value of true if and only if one but not both of its operands is true. Its truth values are: 11 = 0, 10 = 1, 01 = 1, 00 = 0.