Predictive processes in Motor-Sensory interactions

09/07/2025

PI: Iria SanMiguel

Active perception and predictive processing

Most of us are familiar with bistable stimuli such as the Rubin vase, which can be perceived either as two faces or as a vase. Similar phenomena occur in audition, for example with sequences of alternating high- and low-frequency tones, which can be perceived either as a single stream of alternating sounds or as two separate streams, one at each frequency. How is it possible that the same sensory stimulation gives rise to different percepts on different occasions? Such phenomena highlight that perception is not a passive registration of sensory signals; instead, the world we perceive is an active interpretation of the information that reaches our senses.

A key factor in this interpretive process is prediction. The brain constantly and automatically formulates predictions about upcoming sensory input, and these predictions shape sensory responses and, hence, perception (Bendixen, SanMiguel & Schröger, 2012, Int J Psychophysiol). Sensory predictions can arise from many sources. We automatically extract regularities from sound patterns, as when we synchronize our dancing to the beat of the music; we anticipate sounds from visual information, as when we cover our ears before a door blown by the wind slams shut; and we predict the continuation of a song we've heard before from long-term memory. We are interested in understanding the neural mechanisms that support predictive processing and how these mechanisms shape perception.

Why can't you tickle yourself?

A particularly important kind of sensory prediction arises from our own motor behaviour. Efficient sensory processing must take into account the sensory consequences of the organism's own actions: how else could the visual image remain stable even though we constantly move our bodies and our eyes? The solution lies in predictive processing. With every motor command, a predictive representation of the sensory consequences of the movement is sent to sensory areas, where it is compared with the incoming sensory input and cancels out its predicted effects. This is also the proposed explanation for why we can't tickle ourselves: the sensations caused by self-generated tactile stimulation are partially cancelled out by automatically generated predictions of the sensory consequences of our own actions (Blakemore et al. 2000, NeuroReport).

Motor commands are therefore a powerful source of sensory predictions. In our research, we exploit this to study predictive processing in audition by asking participants to deliver sounds to themselves by pressing a button. Sensory responses elicited by self-delivered sounds are attenuated compared with responses to the same sounds when they are not triggered by the participant (SanMiguel et al. 2013, Psychophysiology; Timm et al. 2013, BMC Neurosci).

Hearing silences

Using this kind of task, we can also examine the brain responses elicited when the sound is unexpectedly omitted after the button press. These responses can provide insight into the form that sensory predictions take in the brain. We have observed that when an expected sound is omitted, the brain responds in a similar manner as when the sound is actually presented (SanMiguel et al. 2013, J Neurosci; SanMiguel et al. 2013, Front Hum Neurosci).

Figure 1. Schematic of the neural mechanisms supporting predictive processing in the brain. The diagrams represent the transmission of information between a higher and a lower sensory processing area in three situations. On the left, when no clear time-locked prediction is available, the exogenous response elicited by the sensory input at the lower level is transmitted bottom-up to the higher level. In the middle, when a time-locked prediction is available, the predicted activation is induced at the lower level via top-down signals. The input matches this activation, so the sensory response (the information transmitted bottom-up to the higher level) is minimized, resulting in attenuated sensations. On the right, a prediction is available but the predicted input is omitted. In this case, the predicted response is transmitted bottom-up as a prediction error, eliciting an omission response. Adapted from SanMiguel et al. 2013, J Neurosci.

Figure 2. This animation shows the processing of a sound during passive listening (right) vs. the processing of a highly anticipated but omitted sound (left). In both cases, electrophysiological responses can be observed in auditory areas at the typical latencies of auditory responses (50–150 ms). Animation created by Martin Reiche.
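The three situations in Figure 1 can be caricatured with a toy comparator in which the signal passed bottom-up is the sensory input minus the top-down prediction. This is a minimal sketch under loudly stated assumptions, not the lab's model: the activation pattern `SOUND`, the `PREDICTION_GAIN` value and the helper names `prediction_error`, `magnitude` and `simulate` are all hypothetical, and real prediction and error signals are far richer than a subtraction over a short vector.

```python
from typing import List

# Hypothetical activation pattern that a sound evokes in the lower area
# (arbitrary units, purely illustrative; not measured data).
SOUND = [0.0, 0.2, 1.0, 0.6, 0.1]
SILENCE = [0.0] * len(SOUND)

# Hypothetical prediction strength: 1.0 would cancel the input completely,
# values below 1.0 give only partial attenuation.
PREDICTION_GAIN = 0.8


def prediction_error(sensory_input: List[float], prediction: List[float]) -> List[float]:
    """Bottom-up signal: the part of the input the prediction does not explain."""
    return [x - p for x, p in zip(sensory_input, prediction)]


def magnitude(signal: List[float]) -> float:
    """Summed absolute activity, a crude stand-in for response size."""
    return sum(abs(v) for v in signal)


def simulate(sound_present: bool, prediction_available: bool) -> List[float]:
    """Bottom-up signal for one of the three situations in Figure 1."""
    sensory_input = SOUND if sound_present else SILENCE
    gain = PREDICTION_GAIN if prediction_available else 0.0
    # Top-down signal pre-activates a scaled copy of the expected pattern.
    prediction = [gain * v for v in SOUND]
    return prediction_error(sensory_input, prediction)


if __name__ == "__main__":
    for label, sound, pred in [
        ("no prediction, sound presented", True, False),
        ("prediction, sound presented", True, True),
        ("prediction, sound omitted", False, True),
    ]:
        err = simulate(sound, pred)
        print(f"{label:32s} bottom-up magnitude = {magnitude(err):.2f}")
```

With a gain below 1.0, the predicted sound is attenuated rather than abolished, and the omitted sound leaves a bottom-up signal that mirrors the prediction with inverted sign, loosely paralleling the attenuation and omission responses described above.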

Did I do that? Motor-sensory predictions and the sense of agency

How do we recognize ourselves as the agents of certain stimuli in the environment, when nothing in the stimuli themselves distinguishes them from stimuli we did not cause? The sense of agency may arise from the effects that motor-driven sensory predictions have on sensory responses. That is, whenever a sensation is cancelled out by a motor prediction, we may feel that we caused it. We are interested in understanding the precise relationship between motor-driven sensory predictions and the sense of agency.

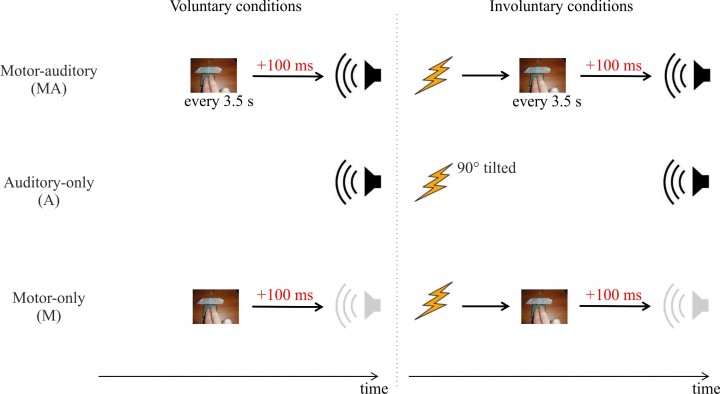

Figure 3. Is there a difference between the brain responses elicited by stimuli we cause voluntarily, and therefore feel we are the agents of, and those we cause involuntarily? In one study, we used single-pulse transcranial magnetic stimulation (TMS) to elicit an involuntary button press, which in turn triggered a sound. We compared the brain responses to these sounds with the responses elicited when participants triggered the sounds by voluntarily pressing the button. Electrophysiological brain responses to sounds were attenuated only when participants voluntarily pressed the button to trigger the sound. Adapted from Timm et al. 2014, J Cogn Neurosci.